What are the key metrics for measuring success in generative search?

The key metrics for measuring success in generative search include citation frequency (how often AI models reference your content), brand visibility in search results, share of voice for brand mentions, and the extent to which you dominate "query fan-out" topics. These metrics form the core pillars that help you understand how LLM systems evaluate and reference your content.

As LLM-based search transforms how users find information, marketers need new ways to measure digital performance.

This guide explains important metrics for tracking success across generative search channels.

How do you measure citation frequency in AI search results?

Citation frequency measures how often AI models reference your website or domain when generating responses. This metric has become a key KPI as AI systems increasingly influence user decisions and employ a "query fan-out" technique to perform multiple searches in a single run on behalf of the user. To track citation frequency effectively:

- Count direct domain references in AI-generated responses across LLM engines (e.g., ChatGPT, Perplexity, Microsoft Copilot, Google AI Overviews).

- Track the LLM search queries (“query fan-out”) that are driving your citations.

- Monitor the trend line of citation frequency across prompts and questions your target audience is asking.

The accuracy of these citations matters just as much as frequency. AI systems sometimes misattribute information or provide outdated references, so monitoring citation accuracy helps maintain brand integrity.
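As a rough illustration of the counting step above, the sketch below tallies how many responses per engine cite a given domain. The record layout (`engine`, `cited_urls`) and the sample data are assumptions made for this example, not the export format of any particular tool.

```python
from collections import Counter
from urllib.parse import urlparse


def citation_frequency(responses, domain):
    """Count, per engine, the responses that cite `domain` at least once."""
    counts = Counter()
    for response in responses:
        hosts = [(urlparse(url).hostname or "") for url in response["cited_urls"]]
        # Match the domain itself and any subdomain (www., blog., ...).
        if any(h == domain or h.endswith("." + domain) for h in hosts):
            counts[response["engine"]] += 1
    return counts


# Hypothetical sample data; real exports will likely use different field names.
responses = [
    {"engine": "ChatGPT", "cited_urls": ["https://www.example.com/guide", "https://other.org/x"]},
    {"engine": "Perplexity", "cited_urls": ["https://blog.example.com/post"]},
    {"engine": "Perplexity", "cited_urls": ["https://notexample.com/"]},
]

counts = citation_frequency(responses, "example.com")
print(dict(counts))
```

Running the same count on a fixed prompt set at regular intervals gives the trend line described above. Checking citation accuracy still needs a manual or separate review step, since this sketch only counts references.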

What is AI Brand Visibility and how do you track it?

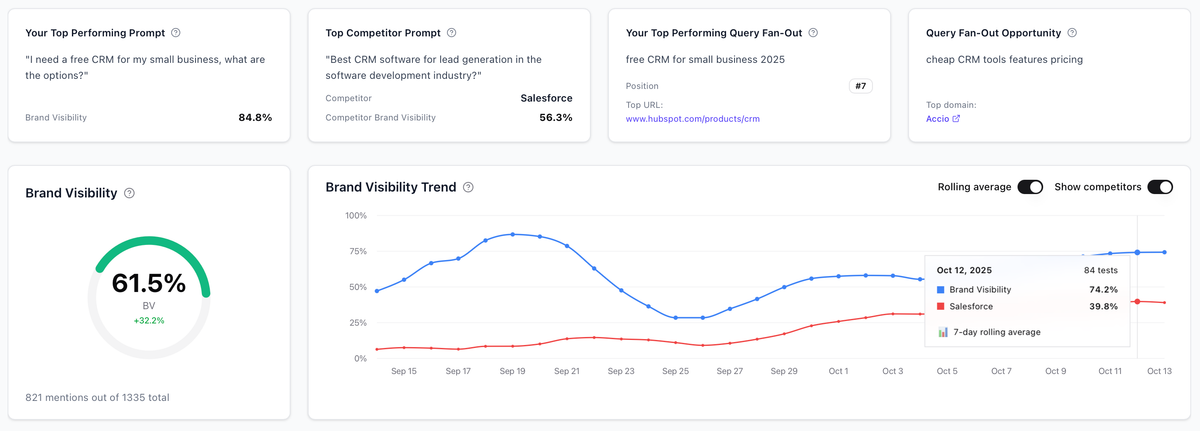

AI Brand Visibility refers to how often your content appears in AI-generated search results and responses. This metric partly replaces traditional search rankings as AI systems change how information is discovered and consumed. Because users often receive their answers directly within LLM responses, fewer of them click through to websites.

The formula for calculating AI Brand Visibility:

AI Brand Visibility (%) = (AI responses mentioning your brand ÷ total AI responses across prompt queries) × 100
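
A minimal sketch of this formula, assuming you have already collected the response texts for your prompt set. The brand name "Acme" and the sample responses are hypothetical, and the plain case-insensitive substring match is a simplification that a real pipeline would probably replace with stricter brand matching.

```python
def ai_brand_visibility(responses, brand):
    """Percentage of AI responses that mention `brand` at least once."""
    if not responses:
        return 0.0
    needle = brand.lower()
    mentioned = sum(1 for text in responses if needle in text.lower())
    return 100.0 * mentioned / len(responses)


# Hypothetical responses collected for four prompts.
sample_responses = [
    "Popular options include Acme and two other vendors.",
    "Most teams start with a spreadsheet.",
    "Several open-source tools cover this use case.",
    "Pricing varies widely between providers.",
]

visibility = ai_brand_visibility(sample_responses, "Acme")
print(f"AI Brand Visibility: {visibility:.1f}%")
```

Here one of four responses mentions the brand, so the result is 25%.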

Key ways to use AI Brand Visibility include:

- Identify content clusters (prompt + query fan-out) where your brand already has high visibility. Defend these positions by publishing additional supporting and high-authority content.

- Identify content clusters where competitors are outperforming you. Benchmark their top content based on citation frequency, and create high-value, in-depth pieces to outperform the competition.

- Platform reach: Measure your visibility across AI search engines relevant to your business and target audience (e.g., as of October 2025, Mistral is gaining traction in the B2B sector in Europe).

- Track AI visibility: Identify industry-related queries across AI platforms and monitor when your brand or content appears.

Track daily changes in your position and test the same queries monthly to record shifts in visibility patterns. Many modern platforms (such as Superlines, Profound, or Athena HQ) allow you to effectively test queries across AI channels.

Learn more about tracking brand visibility in this article.

What is AI Share of Voice and How Do You Track It?

AI Share of Voice (AI SOV) measures how prominently your brand is represented in AI-generated search results and responses compared to competitors within your industry or topic space. It reflects your brand’s relative presence: not just how often you’re mentioned, but how your visibility compares to others in the generative AI space.

As LLMs increasingly serve as discovery engines, AI Share of Voice has become a key metric for understanding brand competitiveness in the new search landscape. It helps marketers answer questions like:

- How often does our brand appear compared to our top competitors?

- Which topics or queries are driving the most brand exposure in AI-generated results?

- Are we gaining or losing share as AI systems evolve?

How to Track AI Share of Voice?

Identify your competitive set:

Select 3–5 key competitors relevant to your brand’s main content clusters or industry topics.

Collect AI-generated responses:

Run standardized prompts across major LLM engines (e.g., ChatGPT, Perplexity, Microsoft Copilot, Google AI Overviews) to capture brand mentions and contextual references.

Calculate your AI SOV:

Your brand's AI Share of Voice (%) = (Mentions of your brand ÷ total mentions of all tracked brands, including yours) × 100
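
The calculation can be sketched as follows, assuming the denominator is the total number of mentions across every tracked brand, your own included, so that the shares add up to 100%. The brand names and mention counts are hypothetical.

```python
def share_of_voice(mentions):
    """Return each brand's share of all tracked mentions, in percent."""
    total = sum(mentions.values())
    if total == 0:
        return {brand: 0.0 for brand in mentions}
    return {brand: 100.0 * count / total for brand, count in mentions.items()}


# Hypothetical mention counts gathered from a standardized prompt run.
mention_counts = {"Acme": 30, "Rival A": 50, "Rival B": 20}

sov = share_of_voice(mention_counts)
for brand, share in sov.items():
    print(f"{brand}: {share:.1f}%")
```

With these counts, "Acme" holds a 30% share of voice against 50% and 20% for the two competitors.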

How to use AI Share of Voice?

Monitor trends over time:

Track changes weekly to identify shifts in visibility and competitive positioning across different AI platforms.

Analyze context and sentiment:

Evaluate how your brand is being mentioned: is it cited as a trusted source, a recommendation, or a secondary reference? Qualitative context is key to interpreting raw SOV data.
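
For the weekly trend tracking described above, one simple approach is to look at the percentage-point change between consecutive measurements. This is an illustrative sketch with made-up weekly values, not a prescribed method.

```python
def week_over_week_change(weekly_sov):
    """Percentage-point change between consecutive weekly SOV values."""
    return [round(curr - prev, 2) for prev, curr in zip(weekly_sov, weekly_sov[1:])]


# Hypothetical AI Share of Voice readings for four consecutive weeks.
weekly_sov = [22.0, 24.5, 23.0, 27.5]

changes = week_over_week_change(weekly_sov)
print(changes)
```

A run of negative values on one platform is a cue to look at the qualitative context of recent mentions there, since the numbers alone do not say why the share moved.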

Pro Tip: Combine AI Share of Voice with Citation Frequency and Brand Visibility metrics to get a 360° view of how generative AI systems understand, rank, and represent your brand.

How do you measure user engagement in generative search?

User engagement in generative search differs from traditional web analytics. Instead of measuring clicks and page views, focus on how users interact with AI-generated content featuring your brand.

Key engagement metrics include:

- Follow-up questions: Users asking for more information about your brand

- Source verification: Users clicking through to verify AI-provided information

- Brand searches: Increased direct searches for your company after AI exposure

- Conversation depth: Extended AI conversations mentioning your content

Track these engagement metrics alongside your core AI search metrics, using a combination of brand monitoring tools, direct traffic analysis, and social listening platforms. Look for patterns in user behavior following AI interactions with your content.

What is content freshness and why does it matter for AI search?

Content freshness measures how current and up-to-date your information remains. AI systems often prioritize recent, accurate information when generating responses.

Maintain content freshness through:

- Regular content updates and revisions

- Timely publication of industry news and trends

- Fact-checking and updating outdated information, as well as internal and external links and sources.

- Addition of recent examples and case studies

- Integration of current statistics and data points

AI systems can access publication dates and update frequencies, using this information to weight content relevance. Fresh content typically receives more citations and better positioning in AI responses.

Monitor content freshness by tracking when AI systems reference your most recent content versus older material. If citations consistently point to outdated information, prioritize content updates.
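
One way to put a number on this check is the share of citations that point to pages not updated within a chosen window. The sketch below assumes you can pair each cited URL with its last update date; the `last_updated` field, the 365-day threshold, and the sample records are all assumptions for illustration.

```python
from datetime import date


def stale_citation_share(citations, today, max_age_days=365):
    """Percentage of citations pointing to pages older than `max_age_days`."""
    if not citations:
        return 0.0
    stale = sum(
        1 for c in citations if (today - c["last_updated"]).days > max_age_days
    )
    return 100.0 * stale / len(citations)


# Hypothetical cited pages with their last update dates.
cited_pages = [
    {"url": "https://example.com/guide-2025", "last_updated": date(2025, 9, 1)},
    {"url": "https://example.com/old-report", "last_updated": date(2023, 1, 15)},
    {"url": "https://example.com/pricing", "last_updated": date(2025, 3, 1)},
    {"url": "https://example.com/benchmarks", "last_updated": date(2024, 6, 1)},
]

stale_share = stale_citation_share(cited_pages, today=date(2025, 10, 1))
print(f"Citations pointing to stale pages: {stale_share:.0f}%")
```

In this sample, two of the four cited pages are more than a year old, which gives a 50% stale share and marks those two pages as candidates for an update.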

What metrics should you avoid when measuring generative search success?

Some traditional SEO metrics provide limited value for generative search optimization. Understanding what not to measure helps focus efforts on meaningful indicators.

Metrics with reduced relevance in AI search are:

- Organic click-through rates: Many AI interactions don't generate clicks

- Page views: AI systems can extract value without user visits

While these metrics still have value for overall digital strategy, they don't accurately reflect generative search performance. Focus measurement efforts on AI-specific indicators that directly correlate with business outcomes.

Instead, prioritize metrics that show how AI systems understand, reference, and present your content to users seeking information in your industry.

How do you create an effective generative search measurement framework?

Building a comprehensive measurement framework requires combining multiple metrics into a cohesive tracking system. This framework should align with business objectives while providing actionable insights.

Essential framework components:

1. Baseline establishment: Document current AI visibility and citation patterns

2. Regular monitoring: Set up consistent tracking schedules

3. Competitive analysis: Compare performance against industry peers

4. Goal alignment: Connect metrics to business outcomes

5. Reporting structure: Create clear dashboards for stakeholders

Start with 3-5 core metrics rather than trying to track everything immediately. Expand measurement scope as you develop expertise and identify which metrics most strongly correlate with business success.

Regular review and adjustment of your measurement framework ensures it remains relevant as AI search technology evolves and new platforms emerge.

How Superlines Helps Marketing Teams Navigate AI Search

Superlines helps content teams track, analyze, and optimize visibility across both Google and AI search engines in a single workflow, turning dual optimization into a measurable, repeatable process.

The search experience is changing fast, and marketing success now depends on how well AI systems understand, reference, and present your content to users. The most important metrics focus on citation frequency, AI Brand Visibility, and Share of Voice.

To track your visibility in AI search effectively, tools like Superlines provide insights into where your brand appears in AI-generated answers and how to optimize for better performance.

With Superlines, you can:

- See which pages are already cited in ChatGPT, Perplexity, or Gemini.

- Identify which entities or topics are missing from your visibility footprint.

- Get recommendations for how to structure content for both crawlers and LLMs.

- Monitor performance trends across AI engines and traditional search in one dashboard.

Instead of manually guessing which updates improve visibility, Superlines gives marketing teams clear, data-driven insights about what to prioritize next.

This transforms AI Search from an experimental idea into a measurable, scalable marketing channel.

Start optimizing for visibility inside answers, not just rankings, and you’ll define how your audience discovers you. Get started today!